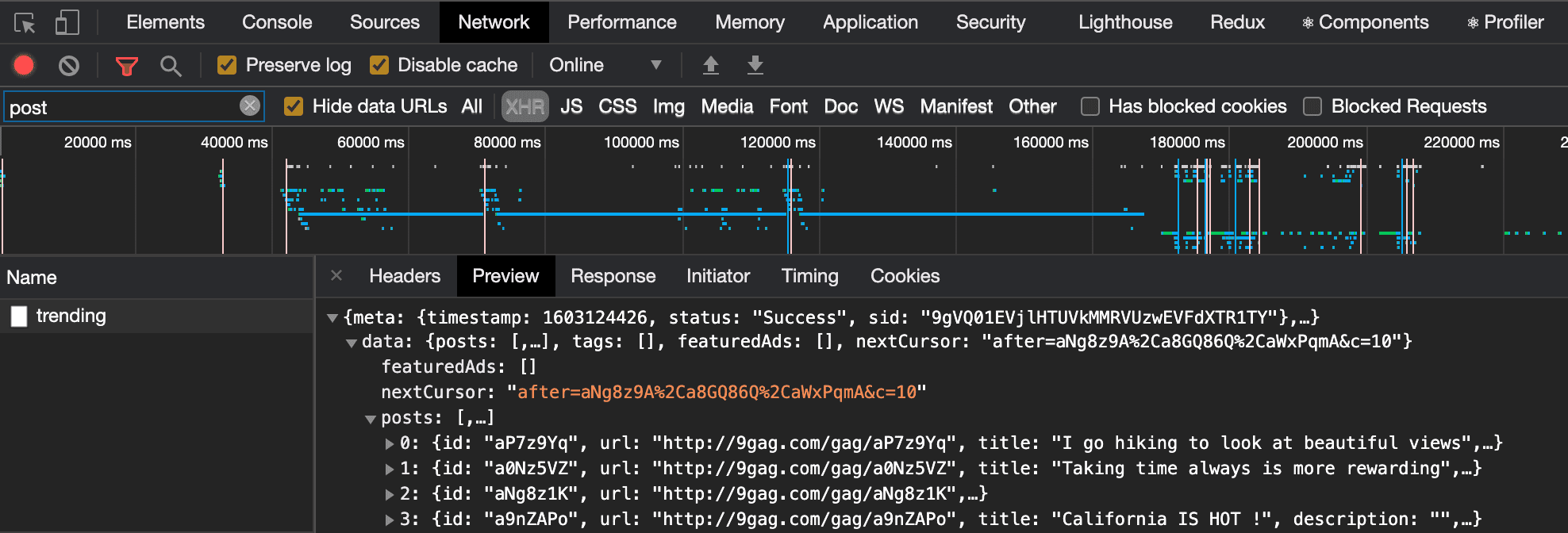

Web scraping is a way to automate gathering information from the internet. It is often the go-to approach for collecting text data and converting it into a meaningful tabular form, but it comes with its own challenges. Two major ones are variety and durability. A very common flow in web applications is for JavaScript to make asynchronous requests (AJAX) to an API server (typically REST or GraphQL) and receive data back as JSON, which is then rendered to the screen.

Sometimes you need to scrape content from a website and a fancy scraping setup would be overkill.

Maybe you only need to extract a list of items on a single page, for example.

In these cases you can just manipulate the DOM right in the Chrome developer tools.

Extract List Items From a Wikipedia Page

Let's say you need this list of baked goods in a format that's easy to consume: https://en.wikipedia.org/wiki/List_of_baked_goods

Open Chrome DevTools and copy the following into the console:
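A minimal sketch of what such a console snippet could look like. The selector '#mw-content-text li' is an assumption about the page's markup, and extractListItems is a helper name made up for this example, so inspect the page and adjust both as needed:

```javascript
// Collect the trimmed text of every element matching `selector` under `root`.
// `root` only needs a querySelectorAll method, so `document` works in the console.
function extractListItems(root, selector) {
  // querySelectorAll returns a NodeList; spread it to get a plain array.
  return [...root.querySelectorAll(selector)]
    .map((node) => node.textContent.trim())
    .filter((text) => text.length > 0);
}

// Only runs where a DOM exists (for example, the DevTools console).
if (typeof document !== 'undefined') {
  // Assumed selector; change it after inspecting the page.
  const items = extractListItems(document, '#mw-content-text li');
  console.log(JSON.stringify(items, null, 2));
}
```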

Now you can select the JSON output and copy it to your clipboard.

A More Complicated Example

Let's try to get a list of companies from AngelList (https://angel.co/companies?company_types[]=Startup&locations[]=1688-United+States).

This case is slightly less straightforward because we need to click 'more' at the bottom of the page to fetch more search results.

Open Chrome DevTools and copy:
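Here is a rough sketch of how such a snippet might be put together. The two selectors are hypothetical (the real class names have to be read off the live page), and scrapeStep and scrapeLoop are names invented for this example. Only the '__companies__' storage key comes from this article:

```javascript
// Hypothetical selectors: inspect the live page and replace them.
const ITEM_SELECTOR = '.company .name';
const MORE_SELECTOR = '.more';
const STORAGE_KEY = '__companies__';

// One step: read the names currently on the page, save them, and click
// 'more' if the button is still there. Returns true when more pages remain.
function scrapeStep(root, storage) {
  const names = [...root.querySelectorAll(ITEM_SELECTOR)].map((el) =>
    el.textContent.trim()
  );
  // localStorage only holds strings, so serialize the array first.
  storage.setItem(STORAGE_KEY, JSON.stringify(names));
  const more = root.querySelector(MORE_SELECTOR);
  if (more) more.click();
  return Boolean(more);
}

// Repeat the step on a timer so the requests are spaced out.
function scrapeLoop(root, storage, delayMs = 2000) {
  if (scrapeStep(root, storage)) {
    setTimeout(() => scrapeLoop(root, storage, delayMs), delayMs);
  }
}

// Only runs where a DOM exists (for example, the DevTools console).
if (typeof document !== 'undefined') {
  scrapeLoop(document, window.localStorage);
}
```

Splitting the work into a single step plus a timer keeps the step easy to check on its own, and the delay is one number to tune.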

You can access the results with:
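A small sketch of reading the saved data back, assuming it was stored as a JSON string under the '__companies__' key. The loadCompanies helper is a name made up for this example:

```javascript
// Read the saved array back out of storage and parse it.
function loadCompanies(storage) {
  const raw = storage.getItem('__companies__');
  // getItem returns null when nothing has been saved yet.
  return raw === null ? [] : JSON.parse(raw);
}

// Only runs in a browser, where window.localStorage exists.
if (typeof window !== 'undefined' && window.localStorage) {
  console.log(loadCompanies(window.localStorage));
}
```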

Some Notes

- Chrome natively supports ES6, so we can use things like the spread operator.

- We spread the result of document.querySelectorAll, as in [...document.querySelectorAll(selector)], because it returns a node list and we want a plain old array.

- We wrap everything in a setTimeout loop so that we don't overwhelm Angel.co with requests.

- We save our results in localStorage with window.localStorage.setItem('__companies__', JSON.stringify(arr)) so that if we disconnect or the browser crashes, we can go back to Angel.co and our results will be saved.

- We must serialize data before saving it to localStorage.

Scraping With Node

These examples are fun but what about scraping entire websites?

We can use node-fetch and JSDOM to do something similar.
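A minimal sketch of that idea, assuming node-fetch v2 and jsdom are installed (npm install node-fetch@2 jsdom). The function names and the selector in the usage comment are made up for illustration:

```javascript
// Pure DOM helper: works on any Document, whether it comes from a browser
// or from JSDOM.
function extractTexts(doc, selector) {
  return [...doc.querySelectorAll(selector)].map((el) => el.textContent.trim());
}

// Fetch a page and parse it with JSDOM.
// Assumes node-fetch v2; v3 is ESM-only and needs `import` instead of `require`.
async function scrapePage(url, selector) {
  const fetch = require('node-fetch');
  const { JSDOM } = require('jsdom');
  const response = await fetch(url);
  const html = await response.text();
  // JSDOM gives us a window whose document supports the usual DOM API.
  const { document } = new JSDOM(html).window;
  return extractTexts(document, selector);
}

// Example usage (the selector is an assumption about the page's markup):
// scrapePage('https://en.wikipedia.org/wiki/List_of_baked_goods', '#mw-content-text li')
//   .then((items) => console.log(items.length))
//   .catch((err) => console.error(err));
```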


Just like before, we're not using any fancy scraping API; we're 'just' using the DOM API. But since this is Node, we need JSDOM to emulate a browser.

Scraping With NightmareJs

Nightmare is a browser automation library that uses electron under the hood.

The idea is that you can spin up an electron instance, go to a webpage and use nightmare methods like type and click to programmatically interact with the page.

For example, you'd do something like the following to log in to a WordPress site programmatically with Nightmare:
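A sketch of what that could look like. The ids '#user_login', '#user_pass', '#wp-submit', and '#wpadminbar' are the ones on a stock WordPress install and may differ on a customized site. The URL and credentials in the usage comment are placeholders, and loginToWordpress is a name made up for this example:

```javascript
// Build the login chain on an existing Nightmare instance.
function loginToWordpress(nightmare, { loginUrl, username, password }) {
  return nightmare
    .goto(loginUrl)
    .type('#user_login', username) // username field on the stock login form
    .type('#user_pass', password) // password field
    .click('#wp-submit') // submit button
    .wait('#wpadminbar'); // the admin bar appears once we are logged in
}

// Example usage, assuming `npm install nightmare`:
// const Nightmare = require('nightmare');
// loginToWordpress(Nightmare({ show: true }), {
//   loginUrl: 'https://example.com/wp-login.php',
//   username: 'admin',
//   password: 'secret',
// })
//   .end()
//   .then(() => console.log('logged in'))
//   .catch((err) => console.error(err));
```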

Nightmare is a fun library and might seem like 'magic' at first.

But the NightmareJs methods like wait, type, click, are just syntactic sugar on DOM (or virtual DOM) manipulation.

For example, here's the source for the nightmare method refresh:


In other words, it is window.location.reload wrapped in their evaluate_now method. So with Nightmare, we are spinning up an Electron instance (a browser window) and then manipulating the DOM with client-side JavaScript. Everything is the same as before, except that Nightmare exposes a clean and tidy API that we can work with.
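To make the 'syntactic sugar' point concrete, here is a toy version of the pattern. This is not Nightmare's actual code: ToyBrowser and evaluateNow are invented for illustration, and the window is passed in directly instead of living inside Electron:

```javascript
// Toy stand-in for a browser automation object; not Nightmare's real code.
class ToyBrowser {
  constructor(win) {
    this.win = win; // the page's window object
  }

  // Run `fn` against the page's window, then report back through `done`.
  evaluateNow(fn, done) {
    let result;
    try {
      result = fn(this.win);
    } catch (err) {
      return done(err);
    }
    done(null, result);
  }

  // "refresh" is nothing more than window.location.reload behind a tidy name.
  refresh(done) {
    this.evaluateNow((win) => win.location.reload(), done);
  }
}
```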

Why Do We Need Electron?

Why is Nightmare built on electron? Why not just use Chrome?

This brings us to the interesting alternative to nightmare, Chromeless.

Chromeless attempts to duplicate Nightmare's simple browser automation API using Chrome Canary instead of Electron.

This has a few interesting benefits, the most important of which is that Chromeless can be run on AWS Lambda. It turns out that the precompiled electron binaries are just too large to work with Lambda.

Here's the same example we started with (scraping companies from Angel.co), using Chromeless:
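A sketch of how that might look, assuming the chromeless npm package and a running Chrome instance it can connect to. The selector '.company .name' is hypothetical, and scrapeCompanyNames is a name made up for this example:

```javascript
// Build the scrape chain on an existing Chromeless instance.
// '.company .name' is a hypothetical selector; inspect the page for the real one.
function scrapeCompanyNames(chromeless, url, itemSelector = '.company .name') {
  return chromeless
    .goto(url)
    .wait(itemSelector)
    .evaluate(
      // This function runs inside the page; the selector is passed as an argument.
      (selector) =>
        [...document.querySelectorAll(selector)].map((el) => el.textContent.trim()),
      itemSelector
    );
}

// Example usage:
// const { Chromeless } = require('chromeless');
// async function run() {
//   const chromeless = new Chromeless();
//   const names = await scrapeCompanyNames(
//     chromeless,
//     'https://angel.co/companies?company_types[]=Startup&locations[]=1688-United+States'
//   );
//   console.log(names);
//   await chromeless.end();
// }
// run().catch(console.error);
```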


To run the above example, you'll need to install Chrome Canary locally. Here's the download link.


Next, run the above two commands to start Chrome Canary headlessly.

Finally, install the npm package chromeless.